Are our schools making the kids we think they should? The tussle between politics and education continues, and Latham is just the blunt end of what is now the assumed modus operandi of school policy in Australia.

Many readers of this blog will no doubt have noticed a fair amount of public educational discussion about NSW’s School Success Model (SSM) which, according to the Department flyer, is ostensibly new. For background, it is important to note that this policy was released under a new Minister for Education who has openly challenged educators to ‘be more accountable’, alongside an entire set of parliamentary educational inquiries set up to appease Mark Latham, who chairs a portfolio committee with a very clear agenda motivated by the populism of his political constituency.

This matters because there are two specific logics used in the political arena that have been shifted into the criticisms of schools: public dissatisfaction leading to the accountability question (so there’s a ‘public good’ ideal somewhere behind this), and the general rejection of authorities and elitism (alternatively, and easily, labelled anti-intellectualism). Both of these political concerns are connected to the School Success Model. The public dissatisfaction is motivating the desire for measures of accountability that the public believes can be free of tampering, and that ‘matter’: test scores dictate students’ futures, so they matter, and so on. The rejection of elitism is also embedded in the accountability issue, due to a (not always unwarranted) lack of trust. That lack of trust often gets openly directed at specific people.

Given the context, while the new School Success Model (SSM) is certainly well intended, it also represents one of the more direct links between politics and education we typically see. The ministerialisation of schooling is clearly alive and well in Australia. This isn’t the first time we have seen such direct links – the politics of NAPLAN came, after all, straight from the political intents of its creators. It is important to note that the logic at play has been used by both major parties in government. Implied in that observation is that the systems we have live well beyond election cycles.

Now in this case, the basic political issue is how to ‘make’ schools rightfully accountable and, at the same time, push for improvement. I suspect these are at least popular sentiments, if not overwhelmingly accepted as a given by the vast majority of the public. So alongside general commitments to ‘delivering support where it is needed’ and ‘learning from the past’, the model is most notable for its main driver – a matrix of measures and ‘outcome’ targets. In the public document, that includes targets at the system level and the school level – aligned. NAPLAN, Aboriginal Education, HSC, Attendance, Student growth (equity), and Pathways are the main areas specified for naming targets.

But, like many of the other systems created with the same good intent before it, this one really does invite the growing criticism already noted in public commentary. Since, with luck, public debate will continue, here I would like to put some broader historical context to these debates and take a look under the hood of these measures, to show why they really aren’t fit for the purpose of school accountability without a far more sophisticated understanding of what they can and cannot tell you.

In the process of walking through some of this groundwork, I hope to show why the main problem here is not something a reform here or there will change. The systems are doing pretty much what they are designed to do.

On the origins of this form of governance

Anyone who has studied the history of schooling and education (shockingly few in the field these days) would immediately see the target-setting agenda as a ramped-up version of scientific management (see Callahan, 1962), blended with a bit of Michael Barber’s methodology for running government (Barber, 2015), using contemporary measurements.

More recently, at least since the radical changes (then labelled ‘economic rationalist’) brought to the Australian public services and government structures in the late 1980s and early 1990s, the notion of measuring the outcomes of schools as a performance issue has matured, in tandem with the increasing dominance over the past few decades of the testing industry (which also grew throughout the 20th century). The central architecture of this governance model would be called neo-liberal these days, but it is basically a centralised ranking system based on pre-defined measures determined by a select few, and those measures are designed to be palatable to the public. Using such systems to instil a bit of group competition between schools fits very well with those who believe market logic works for schooling, or those who like sport.

The other way of motivating personnel in such systems is, of course, mandate, such as the now mandated Phonics Screening Check announced in the flyer.

The devil in the details

Now when it comes to school measures, there are many types, and we actually know a fair amount about most if not all of them, as most are generated from research somewhere along the way. There are some problems of interpretation that all school measures face, which relate to the basic problem that most measures are actually measures of individuals (and not the school), or vice versa. Relatedly, we also often see school level measures which are simply the aggregate of the individuals. In all of these cases, there are many good intentions that don’t match reality.

For example, it isn’t hard to make a case for saying schools should measure student attendance. The logic here is that students have to be at school to learn school things (aka achievement tests of some sort). You can simply aggregate individual students’ attendance to the school level and report it publicly (as on MySchool), because students need to be in school. But it would be a very big mistake to assume that the school level aggregated mean of student attendance is at all related to school level achievement. It is often the case that what is true for the individual is not true for the collective to which the individual belongs. Another case in point is the policy argument that we need expanded educational attainment (which is ‘how long you stay in schooling’) because if more people get more education, that will bolster the general economy. Nationally that is a highly debatable proposition (among OECD countries there isn’t even a significant correlation between average educational attainment and GDP). Individually it does make sense – educational attainment and personal income, or individual status attainment, are generally quite positively related. School level attendance measures that are simple aggregates are not related to school achievement (Ladwig and Luke, 2013). This may be why the current articulation of the attendance target is a percentage of students attending more than 90% of the time (surely a better articulation than a simple average – but still an aggregate of untested effect). The point is more direct: often these targets are motivated by a goal that has been based on some causal idea, but the actual measures often don’t reflect that idea directly.
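To make the individual-versus-aggregate point concrete, here is a minimal sketch using entirely invented numbers for three hypothetical schools. It is not the analysis or the data reported by Ladwig and Luke; it only shows that a relationship which holds perfectly for students inside every school can vanish once you look at school means. The `pearson` helper and the `schools` figures are assumptions made for illustration.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Invented data: attendance (%) and test score for three students per school.
schools = {
    "A": {"attendance": [80, 85, 90], "score": [55, 60, 65]},
    "B": {"attendance": [85, 90, 95], "score": [45, 50, 55]},
    "C": {"attendance": [90, 95, 100], "score": [55, 60, 65]},
}

# Individual level: within each school, higher attendance goes with higher scores.
within_school = {
    name: pearson(d["attendance"], d["score"]) for name, d in schools.items()
}

# School level: correlate the simple school means, as a public report might.
mean_attendance = [mean(d["attendance"]) for d in schools.values()]
mean_score = [mean(d["score"]) for d in schools.values()]
between_schools = pearson(mean_attendance, mean_score)

print("within each school:", within_school)
print("between school means:", between_schools)
```

In this constructed case every within-school correlation is perfect, while the correlation between school mean attendance and school mean score is zero. Real data will be messier, but the sketch shows why one level of analysis cannot simply be read off the other.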

Another general problem, especially for the achievement data, is the degree to which all of the national (and state) measures are in fact estimates, designed to serve specific purposes. The degree to which this is true varies from test to test. Almost all design options in assessment systems carry trade-offs. There is a big difference between an HSC score, where the HSC exams and syllabuses are very closely aligned and the student performance is designed to reflect that, and NAPLAN, which is designed not to be directly related to syllabuses but, overtly, to estimate achievement on an underlying scale that is derived from the population. For HSC scores, it makes some sense to set targets, but notice those targets come in the form of percentages of students in a given ‘Band’.

Now these bands are tidy and no doubt intended to make interpretation of results easier for parents (that’s the official rationale). However, both HSC Bands and NAPLAN bands represent ‘coarsened’ data, which means that they are calculated on the basis of some more finely measured scale (HSC raw scores, NAPLAN scale scores). There are two known problems with coarsened data: 1) in general they increase measurement error (almost by definition), and 2) they are not static over time. Of these two systems, the HSC would be much more stable over time, but even there much development occurs over time, and the actual qualitative descriptors of the bands change as syllabuses are modified. So these band scores, and the number of students in each, really need to be understood to be far less precise than counting kids in those categories implies. For more explanation, and an example of one school which decided to change its spelling programs on the basis of needing one student to get one more test item correct in order to meet its goal of having a given percentage of students in a given band, see Ladwig (2018).
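The fragility of band-based targets can be sketched in a few lines. Everything here is hypothetical: the cut score `BAND_CUT`, the target `TARGET_PERCENT` and the twenty scale scores are invented, and a one-point change in a scale score stands in loosely for ‘one more item correct’ (the real raw-to-scale conversion is more involved). The sketch only shows how a target defined on coarsened data can flip on a change far smaller than the measurement error around any single score.

```python
BAND_CUT = 480         # hypothetical scale score at which the target band starts
TARGET_PERCENT = 50.0  # hypothetical school target: 50% of students in the band


def percent_at_or_above(scores, cut):
    """Percentage of students whose scale score reaches the band cut."""
    return 100.0 * sum(s >= cut for s in scores) / len(scores)


# Invented cohort of 20 students; one student sits one point below the cut.
before = [410, 425, 433, 440, 447, 452, 458, 461, 466, 470,
          479, 482, 488, 493, 497, 503, 511, 520, 534, 548]

# The same cohort after that single student gains one scale point.
after = [s + 1 if s == 479 else s for s in before]

pct_before = percent_at_or_above(before, BAND_CUT)
pct_after = percent_at_or_above(after, BAND_CUT)
meets_before = pct_before >= TARGET_PERCENT
meets_after = pct_after >= TARGET_PERCENT

print(f"before: {pct_before:.0f}% in band, target met: {meets_before}")
print(f"after:  {pct_after:.0f}% in band, target met: {meets_after}")
```

In this invented cohort the school moves from missing its target to meeting it because one student crossed a line by one point, while nothing about the other nineteen students changed.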

There is a lot of detail behind this general description, but the point is made very clearly in the technical reports, such as when ACARA shifted how it calibrated its 2013 results relative to previous test years, where you find the technical report explaining that ACARA would need to stop assuming previous scaling samples were ‘secure’. New scaling samples have been drawn each year since 2013. When explaining why they needed to estimate sampling error in a test that was given to all students in a given year, ACARA was forthright and made it very clear:

‘However, the aim of NAPLAN is to make inference about the educational systems each year and not about the specific student cohorts in 2013’ (p24).

Here you can see overtly that the test was NOT designed for the purposes the NSW Minister wishes to pursue.

The slippage between any credential (or measure) and what it is supposed to represent has a couple of names. When it comes to testing and achievement measurements, it’s called error. There’s a margin within which we can be confident, so analysis of any of that data requires a lot of judgement, best made by people who know what and who is being measured. But that judgement cannot be exercised well without a lot of background knowledge that is not typically in the (already extensive) catalogue of knowledge needed by school leaders.

At a system level, the slippage between what’s counted and what it actually means is called decoupling. And any of the new school level targets are ripe for such slippage. Numbers of Aboriginal students obtaining an HSC is clear enough – but does it reflect the increasing numbers of alternative pathways used by an increasingly wide array of institutions? Counting how many kids continue to Year 12 makes sense, but it is also motivation for schools to count kids simply for that purpose.

In short, while the public critics have spotted potential perverse unintended consequences, I would hazard a prediction that they’ve just scratched the surface. Australia already has ample evidence of NAPLAN results being used as the basis of KPI development with significant problematic side effects – there is no reason to think this model would be immune from misuse, and in fact it invites more (see Mockler and Stacey, 2021).

The challenge we need to take up is not how to make schools ‘perform’ better or teachers ‘teach better’. Any of those are well intended, but this is a good time to point out that common sense really isn’t sensible once you understand how the systems work. To me it is the wrong question to ask how we make this or that part of the system do something more or better.

In this case, it’s a question of how we can build systems in which schools and teachers are rightfully and fairly accountable and in which schools, educators and students are all growing. And THAT question cannot be reached until Australia opens up bigger questions about curriculum that have been locked into what has been a remarkably resilient structure ever since the early 1990s attempts to create a national curriculum.

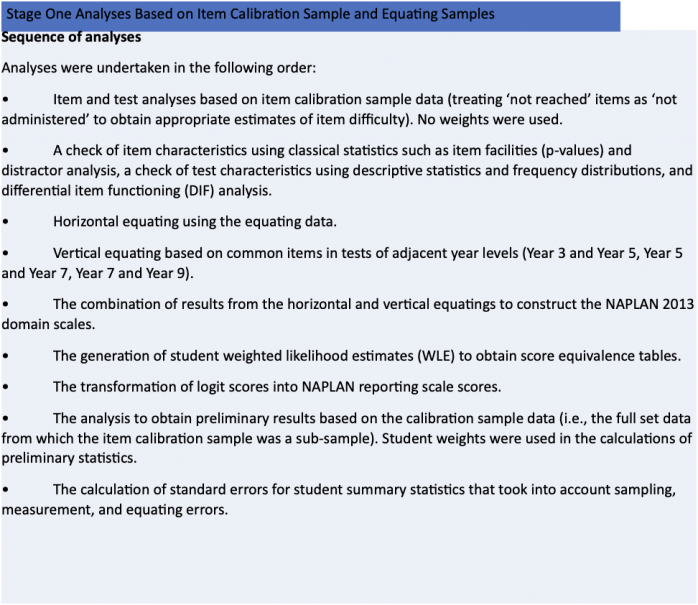

Figure 1 Taken from the NAPLAN 2013 Technical Report, p.19

This extract shows the path from a raw score on a NAPLAN test and what eventually becomes a ‘scale score’ – per domain. It is important to note that the scale score isn’t a count – it is based on a set of interlocking estimations that align (calibrate) the test items. That ‘logit’ score is based on the overall probability of test items being correctly answered.
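As a rough illustration of why a logit-based scale score is not a count, the sketch below assumes a simple one-parameter (Rasch-type) model, the family in which logit scales are usually reported. It is not ACARA’s calibration procedure, which involves item calibration, equating and further transformation as set out in the technical report. The function names and the example proportions are assumptions for illustration only.

```python
from math import exp, log


def logit(p):
    """Log-odds of a probability p, where 0 < p < 1."""
    return log(p / (1.0 - p))


def rasch_probability(ability, difficulty):
    """Probability of a correct answer under a one-parameter logistic model.

    Both ability and difficulty are expressed in logits.
    """
    return 1.0 / (1.0 + exp(-(ability - difficulty)))


# The same ten-percentage-point gain in proportion correct is a different
# distance on the logit scale depending on where it happens.
gap_mid = logit(0.6) - logit(0.5)   # gain near the middle of the test
gap_high = logit(0.9) - logit(0.8)  # same gain near the top of the test

# A student whose ability equals an item's difficulty has an even chance on it.
even_chance = rasch_probability(1.0, 1.0)

print(f"0.5 -> 0.6 correct: {gap_mid:.3f} logits")
print(f"0.8 -> 0.9 correct: {gap_high:.3f} logits")
print(f"P(correct) when ability equals difficulty: {even_chance:.2f}")
```

Under these assumptions, equal steps in proportion correct are not equal steps in logits, which is one reason the resulting scale score should be read as a model-based estimate and not as a tally of right answers.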

James Ladwig is Associate Professor in the School of Education at the University of Newcastle and co-editor of the American Educational Research Journal. He is internationally recognised for his expertise in educational research and school reform. Find James’ latest work in Limits to Evidence-Based Learning of Educational Science, in Hall, Quinn and Gollnick (Eds) The Wiley Handbook of Teaching and Learning published by Wiley-Blackwell, New York (in press). James is on Twitter @jgladwig

References

Barber, M. (2015). How to Run A Government: So that Citizens Benefit and Taxpayers Don’t Go Crazy: Penguin Books Limited.

Callahan, R. E. (1962). Education and the Cult of Efficiency: University of Chicago Press.

Ladwig, J., & Luke, A. (2013). Does improving school level attendance lead to improved school level achievement? An empirical study of indigenous educational policy in Australia. The Australian Educational Researcher, 1-24. doi:10.1007/s13384-013-0131-y

Ladwig, J. G. (2018). On the Limits to Evidence‐Based Learning of Educational Science. In G. Hall, L. F. Quinn, & D. M. Gollnick (Eds.), The Wiley Handbook of Teaching and Learning (pp. 639-658). New York: Wiley and Sons.

Mockler, N., & Stacey, M. (2021). Evidence of teaching practice in an age of accountability: when what can be counted isn’t all that counts. Oxford Review of Education, 47(2), 170-188. doi:10.1080/03054985.2020.1822794

Main image: Australian politician Mark Latham at the 2018 Church and State Summit, 15 January 2018. Source: “Mark Latham – Church And State Summit 2018”, YouTube (screenshot). Author: Pellowe Talk YouTube channel (Dave Pellowe).

Don Carter is senior lecturer in English Education at the University of Technology Sydney. He has a Bachelor of Arts, a Diploma of Education, Master of Education (Curriculum), Master of Education (Honours) and a PhD in curriculum from the University of Sydney (2013). Don is a former Inspector, English at the Board of Studies, Teaching & Educational Standards and was responsible for a range of projects including the English K-10 Syllabus. He has worked as a head teacher English in both government and non-government schools and was also an ESL consultant for the NSW Department of Education. Don is the secondary schools representative in the Romantic Studies Association of Australasia and has published extensively on a range of issues in English education, including The English Teacher’s Handbook A-Z (Manuel & Carter) and Innovation, Imagination & Creativity: Re-Visioning English in Education (Manuel, Brock, Carter & Sawyer).
