Jason Clare’s announced plan to dissolve ACARA, AITSL, ESA, and AERO into the Teaching and Learning Commission raises questions about the need for the Commission and its function, as well as what the focal issues are and how they may be addressed.

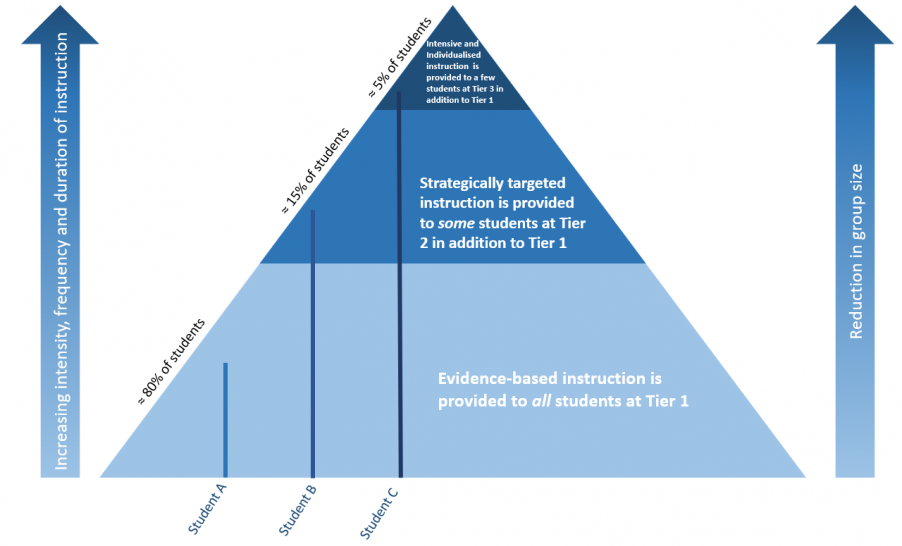

The Teaching and Learning Commission will seek to address issues of inequity and student attrition from public schools through increased standardisation of teaching with greater emphasis on explicit instruction, literacy teaching narrowed to phonics instruction, and classroom management.

The Mparntwe Declaration set out the Australian goals for schooling as:

Goal 1: The Australian education system promotes excellence and equity

Goal 2: All young Australians become:

- confident and creative individuals

- successful lifelong learners

- active and informed members of the community.

As one of the most inequitable schooling systems in the world, Australia has a long way to go in achieving these goals. Australia’s response has been to double down on standardisation. But is standardisation a solution, or is it simply creating and exacerbating the issue?

Will increased standardisation raise student academic achievement?

Sally Larsen has repeatedly shown the claims of falling achievement are inaccurate, yet these claims continue as the basis for changes in policy, practice, and oversight. The tenacious hold on these claims raises questions as to motives well beyond student achievement.

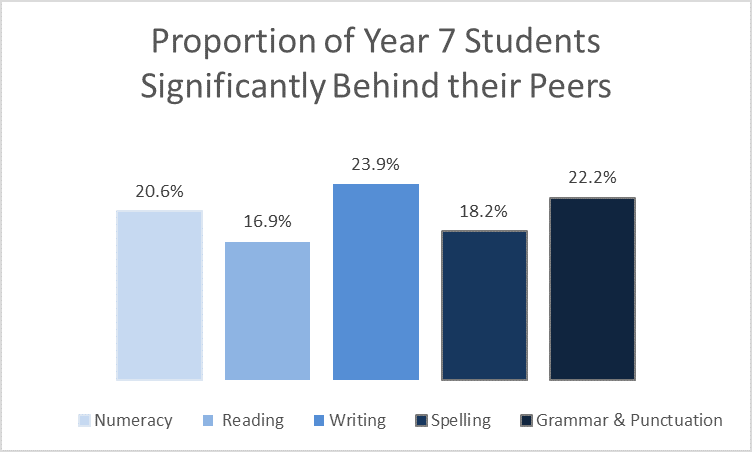

The issue that needs exploration and discussion is what achievement can and should be. Central to standardisation is the focus on narrowed areas for learning, primarily literacy and numeracy. With narrowed focus comes narrowed approaches designed with the intention of high achievement for all. Lost is recognition for learning beyond the narrowed focus, for example Australian students’ achievement in creativity. Also lost is the value for human growth and the purpose of schooling reaching well beyond learning.

Will the Teaching and Learning Commission open discussion as to the purpose of schooling and education more broadly and in turn open a way for a diverse array of success?

Will increased standardisation reduce student exodus from public schools?

Jason Clare’s creation of the Teaching and Learning Commission seeks to address rising rates of school dropout and attrition from public sector schools. Before doubling down on standardisation, which has been growing for over a decade (the same period in which concerns about achievement, equity, and behaviour have risen), it would be helpful to look more closely at why children and young people are turning away from public schools and what they are turning to.

Home education and special assistance schools are the antithesis of standardisation, yet are the fastest growing sectors in education.

When I explored the experiences of families who home educate, the random selection of families showed standardisation to be the central factor that ‘pushed’ them into home education. Home education had not been an active choice but rather a last option the families felt pushed into taking, as the standardised approaches at school were not meeting the needs of their children. Home education is the fastest growing sector of education, and anecdotal evidence so far suggests the recent (post-pandemic) upswing is a response to increasingly standardised schooling not meeting the diversity of student needs.

Within independent schools, the fastest growing area is special assistance schools. When considering the attrition of public school students, it is important to recognise that not all independent schools are the same. Independent schools are more often thought about in the debate over funding and assertions of ‘double dipping’ into school funding and high parent fees. The vast majority of independent schools, however, are low-fee schools, and a growing number are free, providing the flexibility and responsiveness public schools were unable to provide.

Will the Teaching and Learning Commission explore the qualitative research that provides nuanced understanding as to why students are leaving public schools, and in turn support public schools to flexibly respond to the diverse needs of students?

Will standardisation address teacher workload?

The announcement of the Teaching and Learning Commission comes hot on the heels of the recent interim report from the Productivity Commission, which proposed a national database of lesson plans. A strong argument behind the provision of lesson plans for teachers is workload. A recent UK report into the impact of standardisation showed there was no difference in teacher workload between standardised and non-standardised approaches, given the need for modification to meet student needs.

In my work with pre-service teachers, I have found that modifying externally developed lesson plans to be responsive to the range of learning, motivation, engagement, and developmental needs in a classroom takes them longer than creating their own lesson plans to meet the needs of the children they are working with.

The Productivity Commission seemingly ignored its own consultations, where a key theme was the need for:

“Empowering teachers. Teachers should be supported with professional development to enhance their lesson planning skills (NCEC, qr. 29; Teach for Australia, qr. 31). Government policy should encourage innovation and flexibility in lesson design and delivery (AITSL, qr. 55; ESA, qr. 67).”

Standardisation is not about improved teaching nor teacher workload; rather, it is a quest to ‘teacher-proof’ teaching. Here we might ask: what are we ‘teacher-proofing’ against? Standardisation reduces the capacity for teachers to develop against the Australian Professional Standards for Teachers. For example, how might a teacher develop to the level of Highly Accomplished Teacher when the standards require: “Exhibit innovative practice in the selection and organisation of content and delivery of learning and teaching programs”? Standardisation does not allow for innovation, and without innovation we will continue to replicate the status quo of inequity.

Will the Teaching and Learning Commission listen to teachers as to what is weighing them down in their workload to find ways to build time for the core work of teaching beyond the classroom?

Will increased standardisation of Initial Teacher Education address inequity?

A role for the Teaching and Learning Commission will be to double down on Initial Teacher Education to ensure compliance with the TEEP Report, with a focus on the development of practical strategies for teaching and classroom management. The direction for increased standardisation in ITE has been widely critiqued, not least for the lack of evidence on which claims have been based.

Initial Teacher Education is frequently landed with claims of teaching too much theory and not enough practice. Such suggestions highlight a view of teaching as performance and not the complex relational interplay that teachers know all too well.

Standard 1 of the Australian Professional Standards for Teachers is ‘Know students and how they learn’. There is very good reason for this being the first standard – teaching is relational. The impact of teaching is dependent on the relationship built between teacher and students and across the learning environment. Underpinning how teachers form relationships is knowledge of learning theory, theories of development, and more. Value for theory to inform teaching practice is integral to pre-service teachers meeting the graduate standards.

Standardisation is the very thing we need to avoid in Initial Teacher Education and instead support teachers as intelligent, capable professionals to ‘know the students and how they learn’ to design teaching for diverse learning needs across varied contexts.

Will the Teaching and Learning Commission value the complex interplay of theory and practice in developing new teachers able to design for the diverse array of student needs into the future?

Will increased ‘evidence-based practice’ address inequity?

The announcement on the creation of the Teaching and Learning Commission comes before the report on the inquiry into AERO. Though perhaps not before we know the findings.

AERO has been commended on the provision of “data-driven research and swift distribution of user-friendly advice for teachers”. This is an interesting and tautological claim given all research is data-driven, though it highlights the value placed on specific data as promoted by AERO.

The pre-digestion of research disempowers teachers, seeking to simplify the complexity of teaching. Pre-digested research from AERO and other organisations such as the Grattan Institute has been critiqued heavily for the narrow selection of research (reliant on randomised controlled trials and meta-analyses), misrepresentation of research, reliance on self-referencing, and oversimplification leading to errors. The reduction of research to directions for teachers to follow, as in the emphasis on explicit instruction (or direct instruction, as intended), removes teachers from a pedagogic role, reducing teaching to performance.

Will the Teaching and Learning Commission explore ways to support teachers to engage with research and be researchers to make decisions relevant to their students, and in turn re-position teaching as a desirable profession for people to join (and stay in)?

Not ‘what works’ but ‘what works here today’

Colleagues and I have been working with teachers, school leaders, and representatives of education organisations across the public, Catholic, and independent sectors, along with academics. Our aim has been to draw together researchers and educators to understand how we may work together to raise awareness of the problems associated with reliance on a narrow view of evidence-based practice, and how we may open conversation to support and grow the enriched evidence-based practice of teaching.

We have found agreement across sectors as to the detrimental impact of evidence-based practice resulting in standardisation, which is seen to exacerbate inequities through the constraints placed on schools to make decisions relevant to their contexts. While the dominant narrow view of evidence-based practice seeks ‘what works’, one school-based researcher told us the focus in schools is ‘what works here today’. Research can only ever provide insight into what has worked in the past, whether that be years ago or yesterday. It is the role of those in schools to interpret research alongside the evidence from existing practice and evidence from students to determine what will work in their context at any given time.

The UK report into the impact of standardisation showed reduced self-efficacy and autonomy amongst teachers using standardised approaches. Self-efficacy and autonomy are essential to teachers’ ongoing professional learning that may enable equitable outcomes for all students. Autonomy has been raised throughout our work with teachers and school leaders, where their emphasis has been on autonomy to engage with evidence for themselves, to be the decision makers and designers of teaching.

Will the Teaching and Learning Commission work to rebuild teacher professionalism through empowering them with autonomy to engage with the full scope of evidence in context to create teaching for learning?

Finally, will the Teaching and Learning Commission support schools to achieve the Australian goals for schooling? Not through further standardisation, no.

Nicole Brunker is a senior lecturer in the School of Education and Social Work, The University of Sydney. She was a teacher and principal before moving into Initial Teacher Education where she has led foundational units of study in pedagogy, sociology, psychology and philosophy. Her research interests include school experience, alternative paths of learning, Initial Teacher Education pedagogy, and innovative qualitative methodologies. She’s on LinkedIn and on X.